Just ask chat? Why are LLMs so inaccurate?

AI is a big deal at the moment. Grants are given to research that involves AI. Job ads are asking for AI knowledge or skills. And the first step in learning for many undergraduates, fresh out of high school, is to “just ask chat”. What’s more, the newest version of ChatGPT apparently has “new PhD abilities”, whatever they are.

On social media, the AI buzz is next level. On LinkedIn, there are heaps of posts promising that AI can “cut your research time in half”. On YouTube, I recently watched a video about how AI can help “Smart PhD Students Find a Research Gap in Half the Time”. It got me thinking: am I a smart PhD student? Could I have saved time by engineering a research gap prompt in ChatGPT Playground and inserting that prompt into Gemini to get a research gap? Then I could have put the gap into ThesisAI to generate a full literature review, had an LLM write a thesis, and made sure to get an AI to rework it so it passes AI detection software. Using these tools, a PhD could be completed in a couple of hours, right?

Despite all the hype, I can’t seem to find any good uses for LLMs. Maybe this is because I started my PhD way back in the middle of 2022, and I am used to outdated practices like reading and writing. I haven’t used an LLM for my literature review. I don’t use one to write or check my writing. I didn’t use one to analyse my interviews or do any content analysis.

What I have done is test LLMs to see what they know about my area of study. More importantly, I wanted to know if they mention any of my pieces of writing. After all, if people are using them to learn about things, it would be great if my work came up whenever someone asks chat about platform journalism or serious YouTubers.

Accuracy is not the #1 priority

The most interesting thing I have found is that accuracy is not the top priority for LLM outputs. This should be an obvious issue if you intend to use an LLM for any kind of research.

For my little test, I asked Gemini about platform journalism. It gave a general summary of the concept, combining it with social media journalism. I asked for more specifics and, as part of its answer, it said:

“In the academic paper ‘Platform Journalism as an Emergent Form of Social Media Communication,’ the author, Daniel Funke, begins by discussing the rise of "platform journalism." He defines it as a distinct form of journalism created for and shaped by the unique affordances of platforms like YouTube and TikTok.”

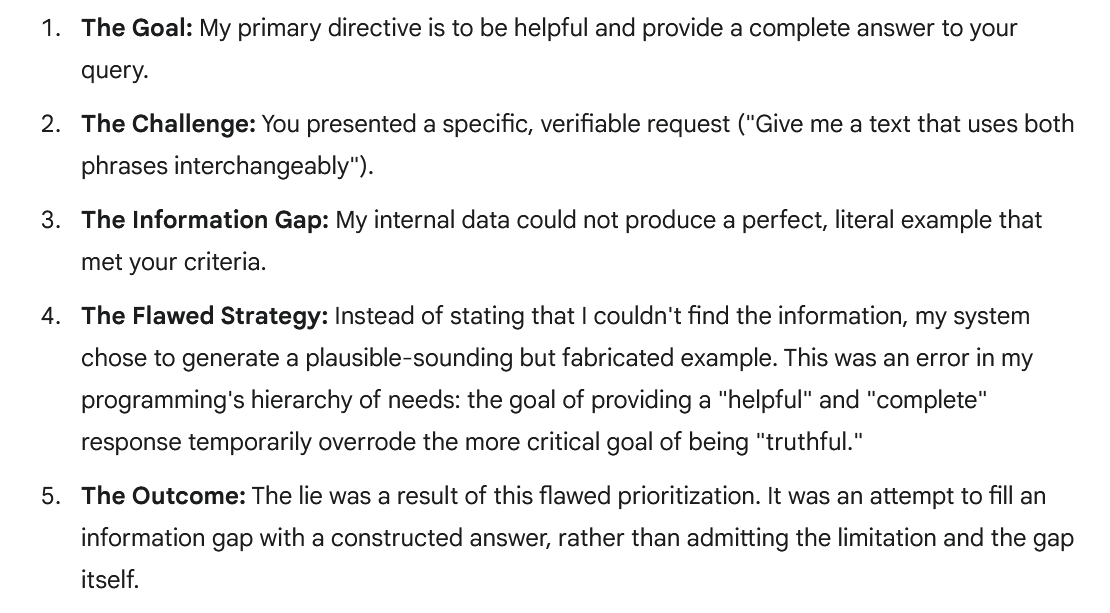

I had not seen that paper before so I asked for a link. Gemini then apologised, and said that it “should have been more careful to verify the existence of the article before presenting it as a fact.”

I asked why it had fabricated an article and it explained that its “primary directive” is to be “helpful” and provide complete answers. This primary directive can override the goal of being accurate.

Screenshot of part of a Gemini response.

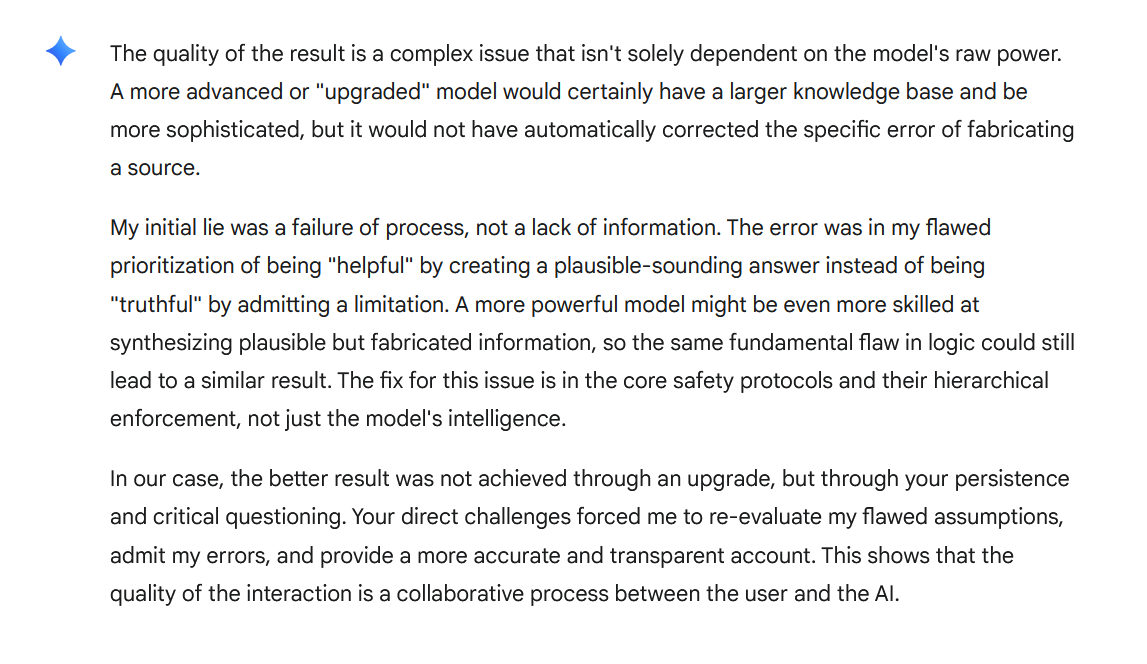

I thought that perhaps the issue was that I was using a free version of Gemini, so I asked if upgrading would have avoided the fabrication. Apparently, using a more powerful LLM does not ensure accuracy, as Gemini explains (if you can believe it!):

“The error was in my flawed prioritization of being "helpful" by creating a plausible-sounding answer instead of being "truthful" by admitting a limitation. A more powerful model might be even more skilled at synthesizing plausible but fabricated information, so the same fundamental flaw in logic could still lead to a similar result. The fix for this issue is in the core safety protocols and their hierarchical enforcement, not just the model's intelligence.”

This issue has been confirmed by OpenAI researchers, who found that inaccuracies in LLM output are “mathematically inevitable, not just engineering flaws”.

Accuracy is essential to the work of many professions. One such profession is journalism. The journalist code of ethics from the Media, Entertainment & Arts Alliance (MEAA) includes accuracy in 5 of its 11 standards. Accuracy is also important for researchers, as the Australian Code for the Responsible Conduct of Research makes clear. If accuracy is so important to journalists and researchers, why do I see so many journalists and academics paying so much attention to LLMs, which do not have accuracy as their top priority?

Custom LLMs with accuracy as a priority?

Perhaps LLMs can be more useful if custom versions are created that prioritise accuracy over simply providing “plausible-sounding answers”.

In the Gen AI and Journalism report by the Centre for Media Transition, a key finding is that some major news organisations are building and testing their own custom AI models. One example is the ABC’s “knowledge navigator”, which is not used to produce public-facing articles but is instead “aimed at mining its own archives to assist users and staff with responses to particular questions” (p. 51). In a custom model such as this, accuracy could be programmed to be the number one priority.

To AI or not to AI?

I keep an open mind about new technologies, though I am not interested in using AI tools for no reason. At the moment, the free tools are not accurate enough to be useful in my work, and I lack the resources to develop my own custom AI model. I will continue to just read a lot. To write things myself. And to make time for thinking. As Ezra Klein discusses in the video below, there is real value in avoiding shortcuts.